Meta has agreed to spend billions of dollars on millions of Nvidia’s chips in a multiyear deal, as the world’s biggest chips group tries to maintain its dominance of the market for AI data centre hardware.

The social media giant’s move to renew and expand its relationship with Nvidia comes as the chip group faces increased competition from rivals such as AMD and its own Big Tech customers, including Meta, who are developing in-house hardware.

Ben Bajarin, chief executive and principal analyst at tech consultancy Creative Strategies, estimated the deal, announced on Tuesday, would be worth billions of dollars.

Meta chief Mark Zuckerberg last month announced the Facebook and WhatsApp owner would nearly double its AI infrastructure spending to as much as $135bn this year.

Nvidia, which for the past three years has been the chief beneficiary of the massive global spending spree on AI infrastructure, is facing increasingly forceful moves by its customers to reduce their dependency on its hardware.

Google, Amazon and Microsoft have all announced new in-house chips in recent months, while OpenAI has co-developed a chip with Broadcom and struck a significant deal with AMD.

Meta has also invested in developing several AI chips in-house. Zuckerberg has outlined plans to achieve a lower cost of computing by deploying the company’s own processors, which are optimised for its “unique workloads”.

However, the chip strategy has suffered some technical challenges and rollout delays, according to one person familiar with the matter.

Meta, already a big Nvidia customer, has now committed to buy Nvidia’s next-generation “Vera Rubin” chips.

It is also the first Big Tech group to say it will buy standalone “central processing units” from Nvidia to run its AI models.

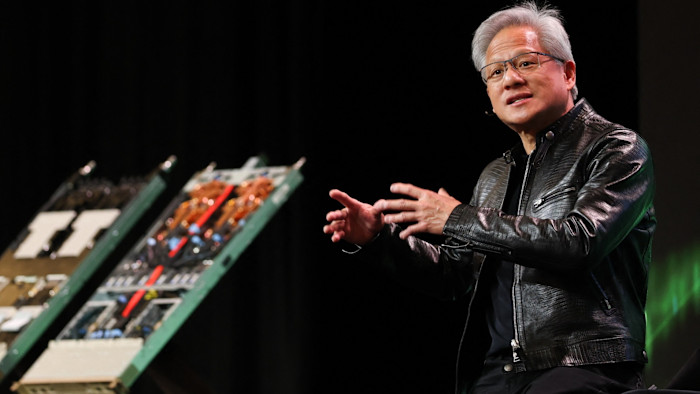

The decision by Nvidia chief Jensen Huang to offer its CPUs separately — rather than as part of a single integrated product including its graphics processing units — marks a major shift in its sales strategy.

GPUs are capable of the massive parallel processing required for training the biggest AI models. But tech groups are increasingly shifting towards “inference” workloads — the process of running AI models.

“The question of why Meta are deploying Nvidia’s CPUs at scale is the most interesting thing in this announcement,” said Bajarin. “We were in the ‘training’ era, and now we are moving more to the ‘inference era’, which demands a completely different approach.”

A number of start-ups are meanwhile offering chips specialised for inference, marking a potential threat to Nvidia’s position as the dominant AI chip provider. In December, Nvidia scooped up talent from inference chip company Groq, in a move to shore up its position in the market.

Nvidia’s stock fell sharply late last year after The Information reported that Meta was in talks with Google to buy the search giant’s Tensor Processing Units, although no such deal has been announced.

Tuesday’s announcement comes ahead of Nvidia’s earnings report next week, as investors have become increasingly jittery around AI spending.

The cost of insuring against default has risen for Big Tech companies pouring hundreds of billions of dollars into AI investments. Prices of credit default swaps on Meta’s five-year debt traded near an all-time high at 0.59 percentage points on Tuesday before the announcement, while those of Oracle reached as high as 1.58 percentage points.

Additional reporting by Michelle Chan