NVIDIA’s Blackwell Ultra has become the go-to compute platform for hyperscalers, and in new benchmarks, the GB300 NVL72 delivers immense performance in low-latency and long-context workloads.

NVIDIA’s Blackwell Ultra AI Racks Now Feature Top-Tier Agentic Performance, Driven By NVLink Upgrades

The AI industry has evolved across multiple layers since its initial boom in 2022, and we are now seeing a major shift toward agentic computing, driven by applications and wrappers built on frontier models. At the same time, infrastructure providers like NVIDIA increasingly need to offer ample memory bandwidth and compute to meet the latency requirements of agentic frameworks, and with Blackwell Ultra, Team Green has done just that. In a new blog post, NVIDIA shared Blackwell Ultra results on SemiAnalysis’s InferenceMAX benchmark, and they are astonishing.

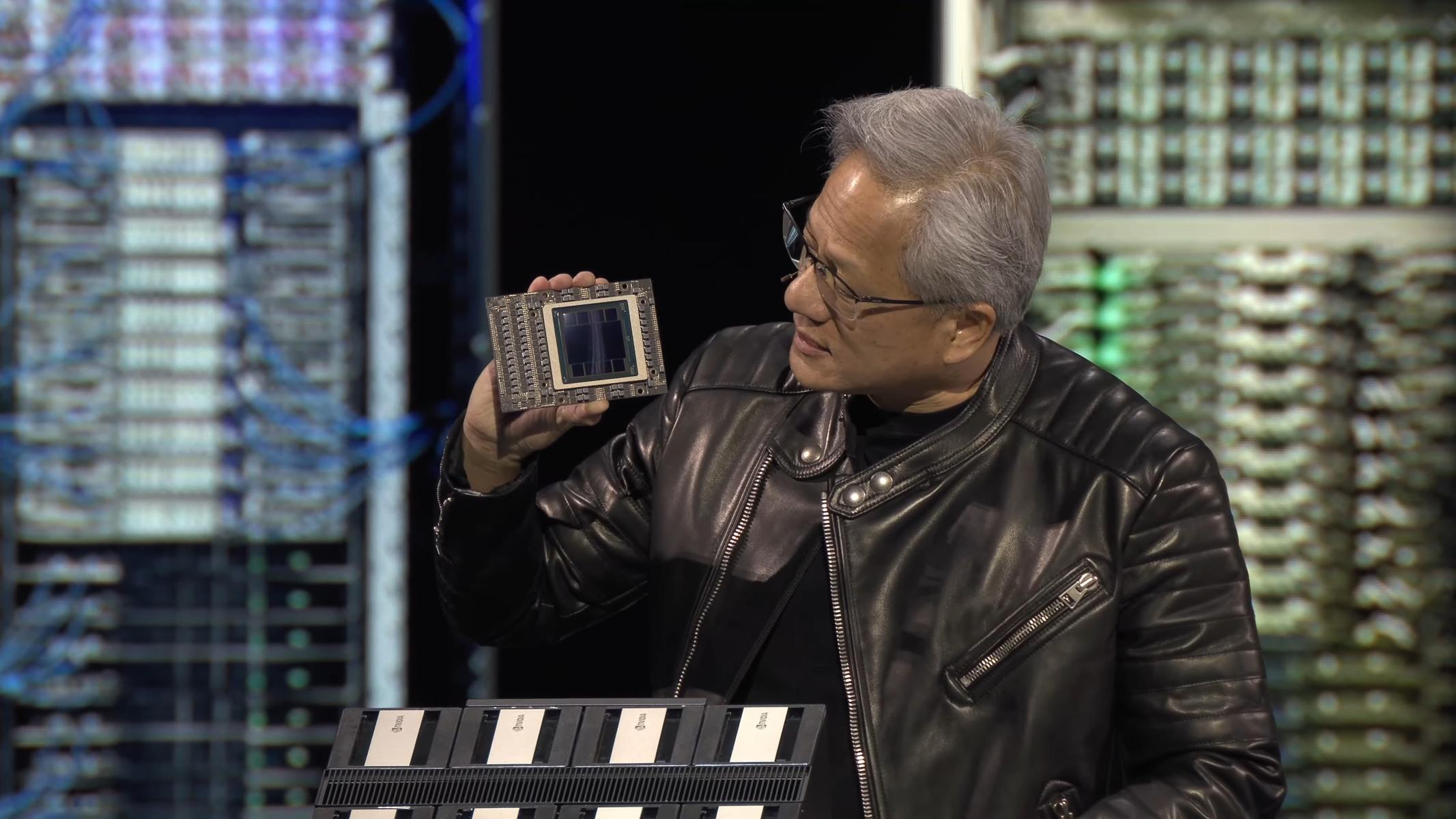

NVIDIA’s first infographic emphasizes a metric called “token/watt”, arguably one of the most important numbers to watch in the current hyperscaler buildout. The company has focused on both raw performance and throughput optimizations, which is why NVIDIA reports that the GB300 NVL72 delivers a 50x increase in throughput per megawatt over Hopper GPUs. The comparison reflects the best possible ‘deployed state’ for each architecture.
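To make the metric concrete, here is a minimal sketch of how throughput per megawatt is computed and how a generational ratio like the 50x figure falls out of it. The function names and inputs are hypothetical placeholders, picked only so the ratio comes out to 50x; they are not NVIDIA’s measured numbers.

```python
def tokens_per_second_per_mw(tokens_per_second: float, power_watts: float) -> float:
    """Throughput normalized to one megawatt of power draw."""
    if power_watts <= 0:
        raise ValueError("power_watts must be positive")
    return tokens_per_second / (power_watts / 1_000_000)


def tokens_per_joule(tokens_per_second: float, power_watts: float) -> float:
    """The 'token/watt' view: tokens/s divided by watts (joules/s) = tokens per joule."""
    return tokens_per_second / power_watts


def improvement(new: float, old: float) -> float:
    """Ratio of new to old efficiency, e.g. 50.0 for a 50x gain."""
    return new / old


# Hypothetical inputs for illustration only (not benchmark data).
old_eff = tokens_per_second_per_mw(tokens_per_second=10_000, power_watts=1_000_000)
new_eff = tokens_per_second_per_mw(tokens_per_second=250_000, power_watts=500_000)
print(old_eff, new_eff, improvement(new_eff, old_eff))  # → 10000.0 500000.0 50.0
```

Normalizing by power is what makes systems of very different sizes comparable: a rack that draws more power can still come out far ahead if its throughput grows faster than its power budget.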

If you are curious how the throughput-per-megawatt gains are so large, NVIDIA credits its NVLink technology. Blackwell Ultra scales NVLink to 72 GPUs, joining them into a single, unified NVLink domain with 130 TB/s of aggregate bandwidth. Compared with Hopper, which is limited to an 8-GPU NVLink domain, NVIDIA has brought a superior architecture, rack design, and, more importantly, the NVFP4 precision format, which is why GB300 dominates in throughput.
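For readers wondering what NVFP4 actually does, the sketch below approximates its block-scaled quantization: values are grouped into 16-element blocks, each block gets its own scale, and every element is rounded to one of the eight magnitudes a 4-bit E2M1 float can represent. This is a simplified illustration, not NVIDIA’s implementation. In particular, it keeps each block scale as a full-precision Python float, whereas NVFP4 stores block scales in FP8 (E4M3) and adds a per-tensor FP32 scale; the function names are hypothetical.

```python
from typing import List, Tuple

# Magnitudes representable by a 4-bit E2M1 float (the sign bit is handled separately).
E2M1_VALUES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
BLOCK_SIZE = 16  # NVFP4 groups elements into 16-value micro-blocks


def quantize_block(block: List[float]) -> Tuple[float, List[float]]:
    """Scale the block so its largest |x| maps to 6.0, then round each
    element to the nearest E2M1 magnitude. Simplification: the scale is a
    Python float here, not an FP8 (E4M3) value as in real NVFP4."""
    amax = max(abs(x) for x in block)
    scale = amax / E2M1_VALUES[-1] if amax > 0 else 1.0
    codes = []
    for x in block:
        mag = min(E2M1_VALUES, key=lambda v: abs(abs(x) / scale - v))
        codes.append(mag if x >= 0 else -mag)
    return scale, codes


def quantize(values: List[float]) -> List[Tuple[float, List[float]]]:
    """Split a flat list into 16-element blocks and quantize each one."""
    return [quantize_block(values[i:i + BLOCK_SIZE]) for i in range(0, len(values), BLOCK_SIZE)]


def dequantize(blocks: List[Tuple[float, List[float]]]) -> List[float]:
    """Multiply each 4-bit code back by its block scale."""
    return [scale * c for scale, codes in blocks for c in codes]


data = [0.1 * i - 1.0 for i in range(32)]
blocks = quantize(data)
restored = dequantize(blocks)
worst = max(abs(a - b) for a, b in zip(data, restored))
print(f"{len(blocks)} blocks, worst-case error {worst:.3f}")
```

The per-block scale is the key design choice: because each small block is scaled to its own range, an outlier in one block does not crush the precision of every other value in the tensor, which is what lets a 4-bit format stay usable for inference.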

Given the “agentic AI” wave, NVIDIA’s GB300 NVL72 testing also focuses on token costs and how the upgrades above affect them. NVIDIA reports a massive 35x reduction in cost per million tokens, making GB300 the go-to inference option for frontier labs and hyperscalers. Once again, scaling laws remain intact and are advancing at a pace few would have imagined, and the major catalysts for these gains are the “extreme co-design” approach NVIDIA has in place, along with, of course, what we call Huang’s Law.
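Cost per million tokens is simple arithmetic: what the hardware costs per hour divided by how many tokens it produces per hour. The minimal sketch below shows how a large cost reduction can coexist with more expensive hardware; the hourly costs and throughputs are hypothetical values chosen to produce a 35x ratio, not NVIDIA’s data.

```python
SECONDS_PER_HOUR = 3600


def cost_per_million_tokens(hourly_cost_usd: float, tokens_per_second: float) -> float:
    """Dollars spent to generate one million tokens at a steady throughput."""
    tokens_per_hour = tokens_per_second * SECONDS_PER_HOUR
    return hourly_cost_usd / tokens_per_hour * 1_000_000


def cost_reduction(old_cost: float, new_cost: float) -> float:
    """How many times cheaper the new system is (e.g. 35.0 for 35x)."""
    return old_cost / new_cost


# Hypothetical: the new system costs 3x more per hour but serves 105x more tokens/s.
old = cost_per_million_tokens(hourly_cost_usd=100.0, tokens_per_second=1_000)
new = cost_per_million_tokens(hourly_cost_usd=300.0, tokens_per_second=105_000)
print(f"{cost_reduction(old, new):.1f}x cheaper per million tokens")  # → 35.0x cheaper per million tokens
```

In other words, the cost reduction equals the throughput gain divided by the increase in hourly cost, which is why throughput improvements dominate the economics.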

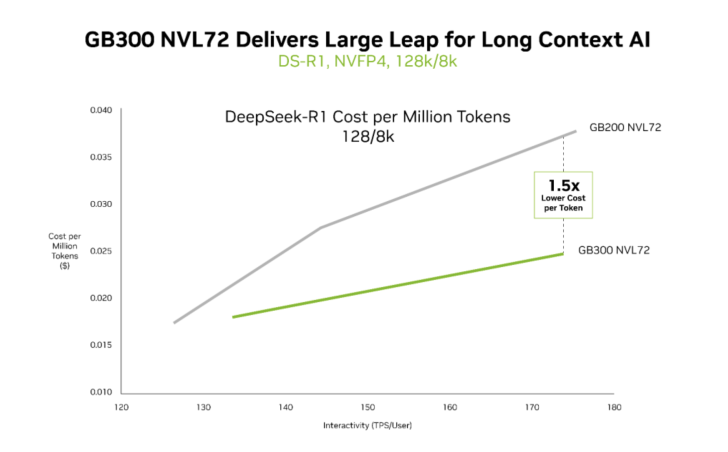

The comparison with Hopper is a bit unfair once you factor in the generational differences in compute nodes and architectures, which is why NVIDIA also compared the GB200 and GB300 (both NVL72) across long-context workloads. Context is a major constraint for agents, since maintaining the state of an entire codebase requires aggressive token usage. With Blackwell Ultra, NVIDIA reports up to 1.5x lower cost per token and 2x faster attention processing, making it well positioned for agentic workloads.
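Why does long context weigh so heavily on hardware? One reason is the key-value (KV) cache that attention keeps for every token in context. The sketch below applies the standard KV-cache sizing formula (2 tensors × layers × KV heads × head dimension × bytes per element × tokens); the model configuration is a hypothetical example and is not tied to any model NVIDIA benchmarked.

```python
from dataclasses import dataclass

GIB = 1024 ** 3


@dataclass
class ModelConfig:
    num_layers: int
    num_kv_heads: int    # with grouped-query attention, fewer than the query heads
    head_dim: int
    bytes_per_elem: int  # 2 for FP16/BF16


def kv_cache_bytes(cfg: ModelConfig, context_tokens: int, batch_size: int = 1) -> int:
    """Memory for the cached key and value tensors across all layers."""
    per_token = 2 * cfg.num_layers * cfg.num_kv_heads * cfg.head_dim * cfg.bytes_per_elem
    return per_token * context_tokens * batch_size


# Hypothetical configuration for illustration only.
cfg = ModelConfig(num_layers=80, num_kv_heads=8, head_dim=128, bytes_per_elem=2)
for ctx in (8_192, 131_072):
    print(f"{ctx:>7} tokens: {kv_cache_bytes(cfg, ctx) / GIB:.1f} GiB")
```

The cache grows linearly with context: in this hypothetical setup, 16x more context means 2.5 GiB versus 40 GiB per sequence, before counting batching. On top of that, attention compute over the full prompt grows quadratically with sequence length, so faster attention matters most precisely in these long-context cases.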

Since Blackwell Ultra is still being integrated by hyperscalers, these are among the first benchmarks of the architecture, and by the looks of it, NVIDIA has kept performance scaling intact and aligned with modern-day AI use cases. And with Vera Rubin, one could expect even greater performance than the Blackwell generation delivers, which is one of the many reasons NVIDIA currently dominates the infrastructure race.

